Getting a Read on Responsible AI

There is great promise and potential in artificial intelligence (AI), but if such technologies are built and trained by humans, are they capable of bias?

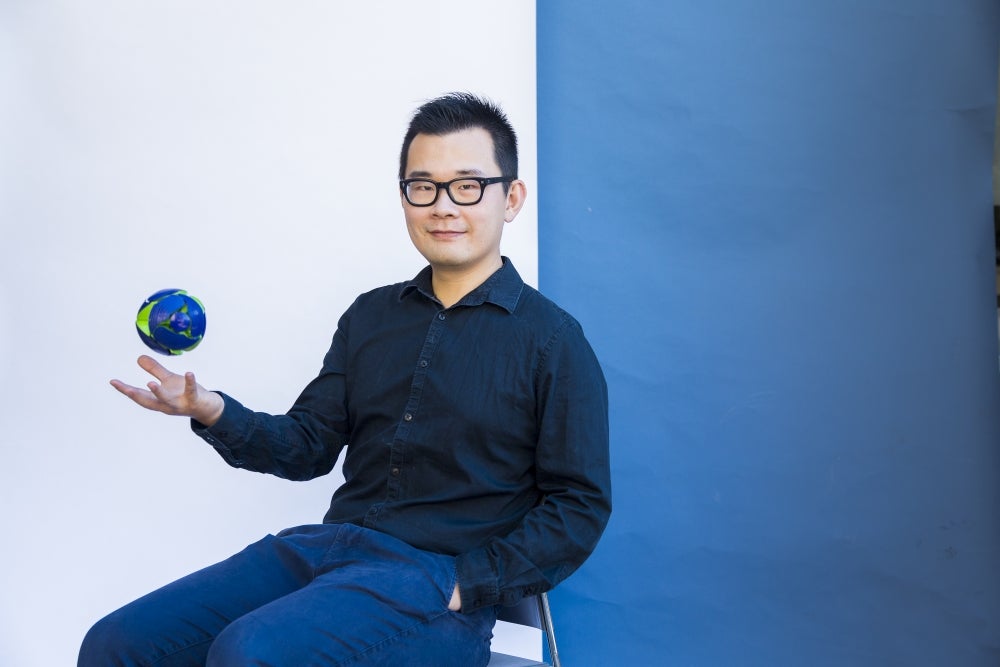

Absolutely, says William Wang, the Duncan and Suzanne Mellichamp Chair in Artificial Intelligence and Designs at UC Santa Barbara, who will give the virtual talk “What is Responsible AI,” at 4 p.m. Tuesday, Jan. 25, as part of the UCSB Library’s Pacific Views speaker series.

“The key challenge for building AI and machine learning systems is that when such a system is trained on datasets with limited samples from history, they may gain knowledge from the protected variables (e.g., gender, race, income, etc.), and they are prone to produce biased outputs,” said Wang, also director of UC Santa Barbara’s Center for Responsible Machine Learning.

“Sometimes these biases could lead to the ‘rich getting richer’ phenomenon after the AI systems are deployed,” he added. “That’s why in addition to accuracy, it is important to conduct research in fair and responsible AI systems, including the definition of fairness, measurement, detection and mitigation of biases in AI systems.”

Wang’s examination of the topic serves as the kickoff event for UCSB Reads 2022, the campus- and community-wide reading program run by UCSB Library. Its new season is centered on Ted Chiang’s “Exhalation,” a short story collection that addresses essential questions about human and computer interaction, including the use of artificial intelligence.

Copies of “Exhalation” will be distributed free to students (while supplies last) on Tuesday, Feb. 1, outside the Library’s West Paseo entrance. Additional events announced so far include on-air readings from the book on KCSB, a faculty book discussion moderated by physicist and professor David Weld and a sci-fi writing workshop. It all culminates May 10 with a free lecture by Ted Chiang in Campbell Hall.

First, though: William Wang, an associate professor of computer science and co-director of the Natural Language Processing Group.

“In this talk, my hope is to summarize the key advances of artificial intelligence technologies in the last decade, and share how AI can bring us an exciting future,” he noted. “I will also describe the key challenges of AI: how we should consider the research and development of responsible AI systems, which not only optimize their accuracy performance, but also provide a human-centric view to consider fairness, bias, transparency and energy efficiency of AI systems.

“How do we build AI models that are transparent? How do we write AI system descriptions that meet disclosive transparency guidelines? How do we consider energy efficiency when building AI models?” he asked. “The future of AI is bright, but all of these are key aspects of responsible AI that we need to address.”